These days, whenever someone mentions “AI that translates by itself,” reactions are divided between excitement and fear. The deliberately provocative title “Who’s Afraid of AI?” is meant to prompt reflection on what is really at stake, beyond the illusion of “free and perfect translation.”

- The big opportunities (and why we shouldn’t demonize AI)

- Speed and throughput: for repetitive, high-volume, low-risk content (technical datasheets, FAQs, generic descriptions), AI-driven translation or content generation can cut time and cost.

- Democratized language access: AI lowers language barriers, so even organizations with small budgets can obtain a first-draft translation.

- Support for human experts: the ideal workflow combines AI + human review (post-editing), letting AI handle bulk and humans refine nuance, style, context.

- Feedback and learning loops: AI systems improve over time through retraining and user feedback, and a well-managed deployment can yield long-term gains for both client and vendor.

But these opportunities hold only if AI is not used “blindly.” That’s where the risks come in.

- When AI translates (or writes) too much — the major hazards

Here are the critical pitfalls when AI is deployed without guardrails:

- Hallucinations / “false facts”

AI may produce citations, sources, or facts that are fabricated but presented as true. In language models, this phenomenon is known as “hallucination.”

➤ That is exactly what happened with the Deloitte report: fabricated citations, nonexistent academic sources, and an invented quote attributed to a court judgment.

- Contextual errors, ambiguity, and loss of nuance

AI struggles with sarcasm, double meanings, idioms, cultural references, and brand tone.

In technical, legal, or scientific texts, a poorly translated term or an ambiguous phrase can dramatically shift the meaning, with serious consequences.

- Implicit bias and stereotypes

AI models are trained on large corpora that contain cultural biases and stereotypes related to gender, class, and ethnicity. These biases can surface in translation, for example when a gender-neutral source term for a profession is rendered with a stereotyped gender, or when biased phrasing is chosen.

- Privacy, security, and data handling

Uploading sensitive documents to AI systems (especially free or public cloud services) carries the risk that the data may be stored, reused, or used for training.

In legal, medical, and contractual domains, such leaks can result in legal liability or regulatory violations.

- Technological dependence and loss of human touch

Over-automation may erode the critical oversight and human sensibility needed to maintain style, tone, and cultural resonance. Over time, content can become flat, generic, and bland.

- Reputational and legal risk

A mistranslated press release, contract clause, or web page is highly visible once published. It can erode the trust of stakeholders, clients, and partners, and in extreme cases it can give rise to contractual or legal liability.

- The Deloitte Australia case: a concrete cautionary tale

On October 6, 2025, it became public that Deloitte Australia would partially refund the Australian government after a 237-page report worth AUD 440,000 was found to contain serious errors, including fabricated quotes and false academic citations.

The original report has since been revised; Deloitte stated that AI usage did not alter the core findings, but acknowledged errors in citations and references.

This high-profile incident shows that even large, organized firms with review processes may misstep if they treat AI as a magic black box.

The lesson for potential clients is clear: these are not hypothetical fears but real reputational and financial stakes.

- Best practices for responsible AI use in translation and text generation

To make sure AI works for you rather than against you, here are our recommended guidelines, which we at InnovaLang also endorse in our own practice:

| Phase | Recommendation |
|---|---|
| Risk assessment | Classify each text by criticality: low (FAQs, generic content), medium (marketing, website) or high (legal, contracts, regulatory). |
| Technology selection | Use professional, customizable models that support glossaries and terminology memory, not generic free tools that lack confidentiality guarantees. |
| Hybrid AI + human | Use AI only for the draft stage; the final version must be refined by experienced human translators. |
| Contextual verification | Cross-check references, citations, facts, overall coherence, and terminological consistency. |
| Glossaries & terminology memory | Embed client lexicons and terminology so that the AI is constrained to the brand tone and a consistent vocabulary. |
| Final quality control | Apply a human checklist: terminological correctness, coherence, contextual sense, legal consistency, sources. |
| Data governance & confidentiality policies | Put in place clear NDAs and confidentiality clauses, data-handling protocols, and guarantees that client data will not be reused for training. |
| Post-publication monitoring | Collect user feedback, track reported errors, and update internal processes accordingly. |

In short: AI is a powerful enabler, but not autonomous when it comes to content that matters.

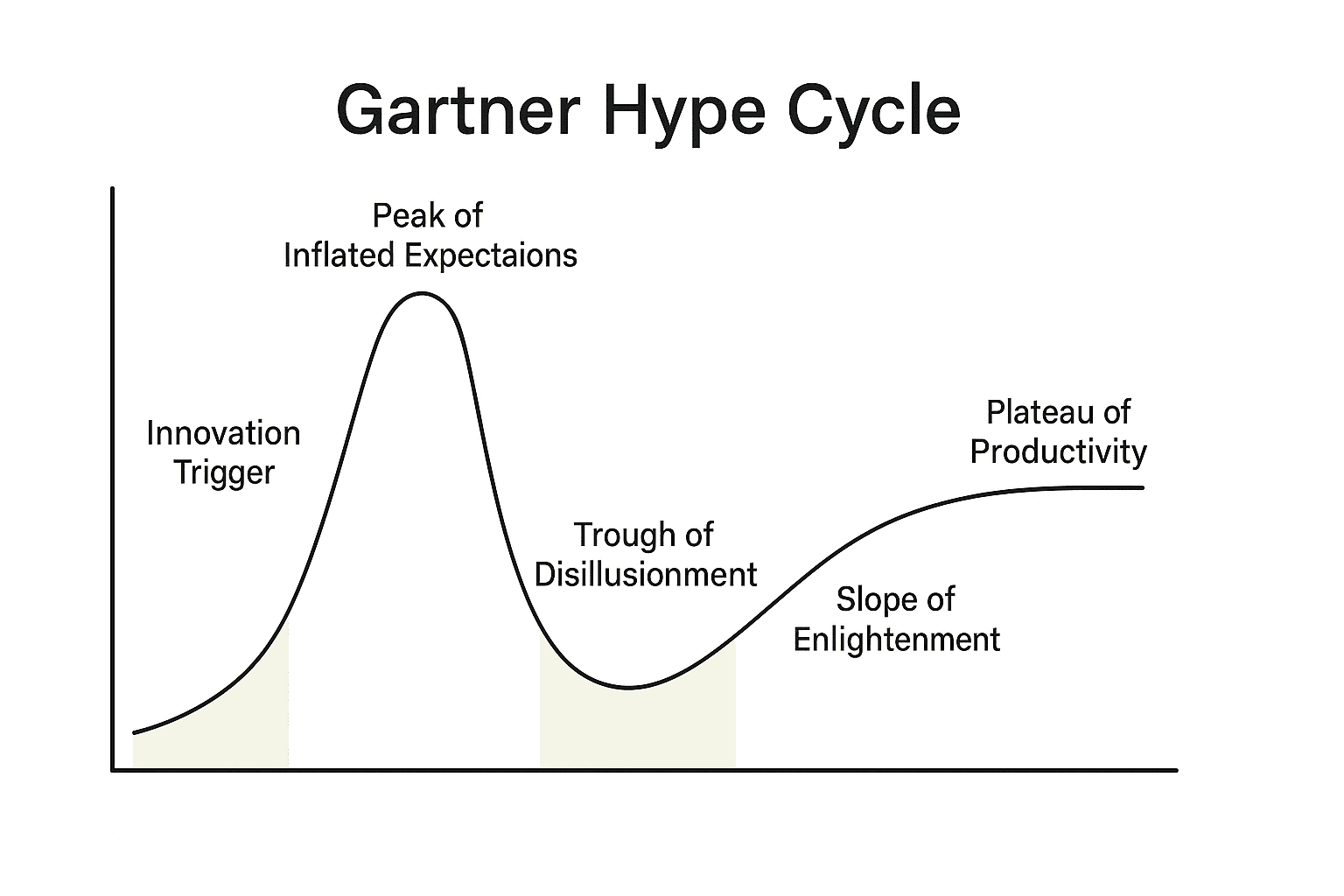

For more on AI and the hype cycle, see our recent article on how enthusiasm around a new technology tends to evolve!

- Closing message

AI may seem like a tempting shortcut, but without expert oversight it can backfire: hidden errors, hallucinations, loss of trust, and reputational damage are all real possibilities. The question every enterprise should be asking is: “Who is correcting the AI?”

InnovaLang offers integrated solutions: AI-assisted translation supervised by seasoned human translators, quality control, and terminological consistency, so clients get the speed advantage of AI without the risk.

For a free audit or consultation on how your organization uses (or risks misusing) AI in language services, contact us today.